Posted by Tech.us Category: Big Data AI Artificial Intelligence Deep Learning

Artificial intelligence (AI) has been a huge topic recently. You will find countless articles about machine learning (ML), neural networks, and the many things AI can do, such as deepfakes, speech recognition, and autonomous driving.

It deserves the attention it is receiving. After all, using this technology well can help your business flourish. However, before you apply AI or even plan to use it in your company, you should know its history and evolution.

In the 1940s and 1950s, groups of scientists, mathematicians, engineers, psychologists, political scientists, and economists discussed the possibility of creating an artificial brain. These discussions led to the founding of artificial intelligence as an academic discipline.

The notion of artificial intelligence became more popular in 1950, when Alan Turing published his paper "Computing Machinery and Intelligence," which asked whether machines can think. In that paper, he also devised what is now known as the Turing test. The paper pushed people to take artificial intelligence more seriously.

The development of game AI also started in the 1950s, when Christopher Strachey and Dietrich Prinz wrote programs that played checkers and chess. The resulting programs could give amateur players a game that felt like competing against another person.

From the late 1950s until the mid-1970s, researchers made significant progress in artificial intelligence. During this period, AI programs could solve math word problems and even learn to speak English.

Despite the progress, the development of artificial intelligence was hampered by technical limitations.

For example, an AI program could learn to escape a simple maze by listing all the possible moves and testing them until it found a sequence that worked. However, if the maze was too complicated, the number of possible moves became astronomical. The AI failed to solve those kinds of mazes because the computer’s memory was too small to hold all the moves the program generated.
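The brute-force approach described above can be sketched in a few lines of Python. This is a minimal illustration, not a reconstruction of any historical program: the 3x3 `MAZE` layout, the move letters, and the function names are all assumptions made for the example.

```python
from itertools import product

# Hypothetical maze for illustration: 'S' start, 'E' exit, '#' wall, '.' open.
MAZE = [
    "S.#",
    "..#",
    "#.E",
]

# Row/column offsets for each move letter (up, down, left, right).
MOVES = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}


def find(maze, symbol):
    """Return the (row, column) of the first cell containing symbol."""
    for r, row in enumerate(maze):
        c = row.find(symbol)
        if c != -1:
            return r, c
    raise ValueError(f"{symbol!r} not found in maze")


def reaches_exit(maze, moves):
    """Follow a sequence of moves from 'S'; True if it steps onto 'E'."""
    r, c = find(maze, "S")
    for move in moves:
        dr, dc = MOVES[move]
        r, c = r + dr, c + dc
        inside = 0 <= r < len(maze) and 0 <= c < len(maze[0])
        if not inside or maze[r][c] == "#":
            return False  # walked off the grid or into a wall
        if maze[r][c] == "E":
            return True
    return False


def brute_force_escape(maze, max_length):
    """Test every move sequence, shortest first, up to max_length moves.

    Returns (winning sequence or None, number of sequences tested).
    """
    tested = 0
    for length in range(1, max_length + 1):
        for candidate in product("UDLR", repeat=length):
            tested += 1
            if reaches_exit(maze, candidate):
                return "".join(candidate), tested
    return None, tested


if __name__ == "__main__":
    path, tested = brute_force_escape(MAZE, max_length=6)
    print(path, tested)
```

On this tiny maze the search finds the sequence `DRDR` after testing a couple of hundred candidates. With four possible moves per step there are 4^n sequences of length n, so a maze whose shortest escape takes 30 moves already implies more than 10^18 candidates. That kind of growth is what overwhelmed the computers of the era.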

This era also saw the birth of ELIZA, one of the first AI programs that could converse with people. Like other AI programs of its time, it was limited by the computer it ran on.

Unfortunately, although ELIZA could sound like a real person, it had no idea what it was talking about. For that reason, ELIZA is remembered less as a thinking machine and more as the first chatbot.
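A short sketch shows how a program can sound conversational without understanding anything. ELIZA worked by matching keywords in the user's input and slotting fragments of it into canned replies. The rules, the `respond` function, and the fallback line below are hypothetical and written for illustration; they are not ELIZA's original script.

```python
import re

# Hypothetical rules for illustration only; not ELIZA's original script.
# Each rule pairs a pattern with a reply template that reuses the match.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(message):
    """Return a canned reply built from the first rule that matches."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I am tired of work."))
    print(respond("Hello"))
```

The program never models what "tired" or "work" means. It only echoes the matched text back inside a template, which is why the conversation falls apart as soon as the input stops fitting a rule.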

Of course, AI researchers and computer scientists also faced failures, but these were not enough to discourage them from pursuing artificial intelligence further. They made many predictions with optimistic timeframes. However, most of them did not come true until about 40 years later.

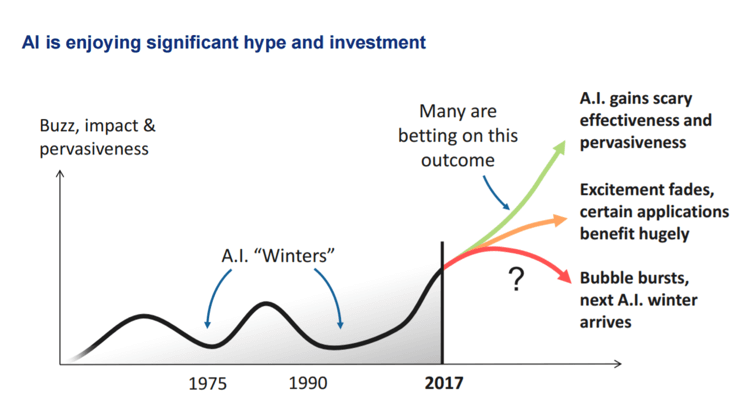

After the golden years, the development of artificial intelligence slowed down. Private entities and governments had poured massive amounts of resources into research teams to advance the technology, but to little avail. Only a few discoveries and innovations saw the light of day.

People in the field refer to these periods as the AI winters. Those who remained in the industry were often referred to as survivors. Even though the winters did not produce groundbreaking results, they contributed a lot to artificial intelligence research.

First, researchers discovered that the computers and machines available to them at the time were the main reason they could not push forward with their research.

Second, they understood that they were still far from creating a machine that could think like a human. Proving a complicated theorem can be easy for an AI but difficult for a person, while recognizing a face is simple for a person but was out of reach for the AI of the time. This is known as Moravec’s paradox.

Third, artificial intelligence requires an immense amount of information to achieve proper reasoning and common sense.

Progress in artificial intelligence picked up again in the 1990s and continued through the 2010s. By then, the field was already about 50 years old.

The most significant difference between the 1950s and the 1990s was that microprocessors had become widely available and were far more powerful than the processors used in earlier computers.

Even though researchers had more computing power at that time, the artificial intelligence field’s progress was still slow. However, it was not something that they fretted over.

With the popularity and reliability of Moore’s Law, researchers and programmers knew that they only needed to wait a few more years to have access to much faster and more powerful computers that would allow them to progress efficiently.
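As a rough piece of arithmetic, the waiting game looks like this. The sketch assumes the commonly cited form of Moore’s Law, in which transistor counts double about every two years. The function name and the two-year default are assumptions for illustration, and transistor count is only a proxy for real-world speed.

```python
def projected_growth(years, doubling_period_years=2.0):
    """Growth factor after a number of years, assuming steady doubling."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    for years in (2, 10, 20):
        print(years, "years ->", projected_growth(years), "x")
```

Under that assumption, a decade of waiting yields hardware with roughly 32 times as many transistors, and two decades yields about 1,000 times as many.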

Knowing the limitations and the problems researchers encountered during the advent of artificial intelligence, you can say that researchers can overcome most of those limitations today.

First, computers are cheap and fast. Microprocessors can now perform more than three billion instructions per second. And with the advent of Tensor Processing Units (TPUs), chips built specifically to accelerate neural network workloads, AI can take fuller advantage of machine learning.

Second, with new peripherals and technology, computers can recognize a face and understand speech within seconds. It also helps that modern GPUs are designed to handle machine learning and deep learning workloads, and they are useful for work that combines AI with physics.

Third, big data is here. Thanks to the internet and the numerous companies that have collected massive amounts of information over the years, artificial intelligence researchers have many large datasets to choose from when training their deep learning programs.

Today’s artificial intelligence technology has come a long way. For one, AI chess programs once could only hope to win against professional chess players, but in 1997, Deep Blue defeated the reigning world champion, Garry Kasparov. Before, an AI program could only solve mazes. Now, it can perform pathfinding and autonomous driving.

With the help of machine learning and deep learning, AI’s evolution is far from over. Artificial intelligence may need only a few more years before it becomes part of everyday human society. It is already seen as an integral component of successful modern companies and corporations. And regardless of the industry you are in, artificial intelligence will likely find a place in your business.
